Recurrent Neural Networks (RNNs) are a special type of neural network used for sequential data analysis, where inputs are not independent of each other and are not of fixed length, as is assumed in feed-forward networks such as the MLP. Rather, in this case, inputs depend on each other along the time dimension. In other words, what happens at time ‘t’ may depend on what happened at time ‘t-1’, ‘t-2’ and so on.

These are also called ‘memory’ networks, as information from previous inputs persists in the model’s hidden state and is used when processing later inputs. They can capture both short-term and long-term time dependence. Because they handle sequential data well, these networks are typically suitable for speech recognition, sentiment analysis, forecasting, language translation and other such applications.

Let’s now spend some time looking at how an RNN works.

Recurrent Neural Network (RNN)

As you may recall, in a typical feed-forward neural network the input is fed in at the beginning, the hidden layers do the processing, and finally the output layer produces the output. In an RNN, on the other hand, we will generally have a different input, output and cost term for each time step. However, the same weight matrices are shared across all time steps in the network.
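The recurrence described above can be sketched in a few lines of plain Python. This is only a minimal illustration under stated assumptions: the function name `rnn_forward`, the weight names `W_xh`, `W_hh` and `b_h`, the tanh hidden activation and the toy numbers are all chosen for this example and do not come from any particular library.

```python
import math

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_forward(xs, W_xh, W_hh, b_h, h0):
    """Run a vanilla RNN over a sequence and return the hidden state at each step.

    The same W_xh, W_hh and b_h are reused at every time step; only the
    input x and the previous hidden state h change.
    """
    h = h0
    states = []
    for x in xs:
        pre = [a + b + c
               for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]  # new state depends on x and the old state
        states.append(h)
    return states

# Toy example (hypothetical values): 2 input features, 2 hidden units, 3 time steps.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = rnn_forward(xs, W_xh, W_hh, b_h, [0.0, 0.0])
```

Because the loop simply runs once per element of `xs`, the same function handles sequences of any length, which is the property that a fixed-size MLP input layer lacks.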

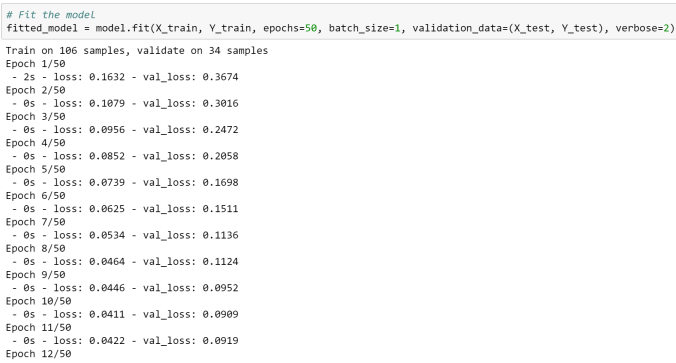

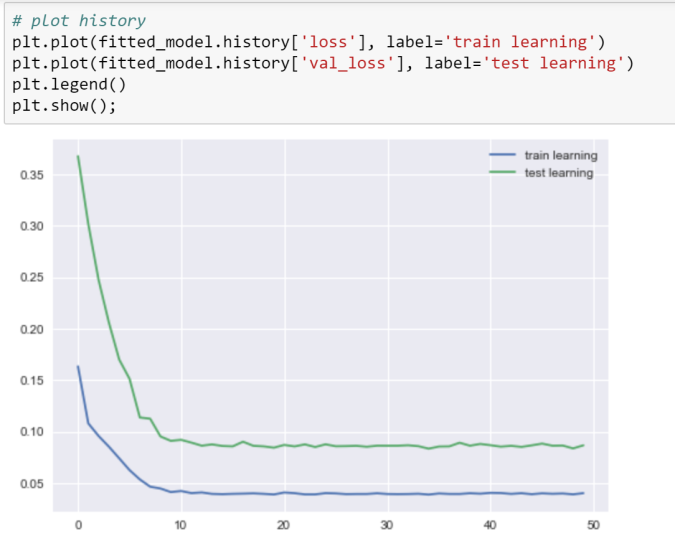

One point to note is that RNNs are also trained using backward propagation of errors and gradient descent to minimize the cost function. However, backward propagation in an RNN happens across time steps, and hence it’s called Backpropagation Through Time (BPTT). In a typical RNN, the network unrolled over time may have hundreds or even thousands of time steps, and therein lies the problem of vanishing or exploding gradients, to which vanilla RNNs are particularly susceptible.
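To see why long sequences cause trouble, consider a deliberately simplified case: a single hidden unit with a scalar recurrent weight `w` and no activation function, so that the gradient flowing back through each time step is just multiplied by `w`. The function name `gradient_through_time` and the values below are assumptions for illustration. A real RNN also multiplies by the activation derivative, which for tanh is at most 1 and so only shrinks the gradient further.

```python
def gradient_through_time(w, steps):
    """Gradient of the last hidden state w.r.t. the first one for h_t = w * h_(t-1)."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # one factor of w per time step crossed during BPTT
    return grad

vanishing = gradient_through_time(0.9, 100)  # shrinks towards zero
exploding = gradient_through_time(1.1, 100)  # grows very large
print(vanishing, exploding)
```

With a weight only slightly below 1 the gradient all but disappears after 100 steps, and with a weight only slightly above 1 it blows up, so early time steps either receive almost no learning signal or destabilize the update.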

There are techniques such as gradient clipping, which limits exploding gradients, and architectures such as Long Short Term Memory (LSTM) or Gated Recurrent Unit (GRU), which help mitigate the vanishing gradient issue. We will now delve deeper into how an LSTM works.
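As a rough sketch of gradient clipping, one common variant rescales the whole gradient vector whenever its L2 norm exceeds a threshold. The helper name `clip_by_norm` and the threshold value are hypothetical. Deep learning libraries ship their own versions of this, and this sketch is not a copy of any of them.

```python
import math

def clip_by_norm(grads, max_norm):
    """Rescale a list of gradient values so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)           # small enough already: leave unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]  # same direction, shorter length

clipped = clip_by_norm([3.0, 4.0], 1.0)  # original norm is 5.0
```

Clipping keeps the direction of the update and only limits its size, which is why it helps with exploding gradients but does nothing for vanishing ones.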

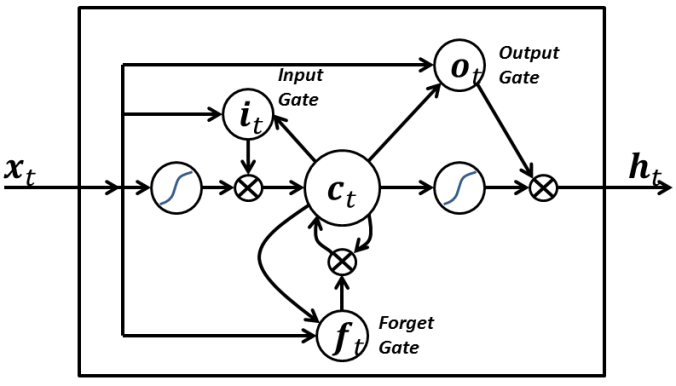

An LSTM network consists of hidden layers that have many LSTM blocks or units. In turn, each LSTM unit has the following components:

- Memory Cell: The component that remembers values over a period of time. Its contents pass through an activation function (typically tanh) when they are read out.

- Input gate: Controls the addition of information to the memory cell. The gate itself generally has a sigmoid activation, so its values range between 0 and 1, and it scales candidate values that a tanh activation has squashed between -1 and +1.

- Forget gate: Controls whether values are removed from or retained in the memory cell. This will generally have a sigmoid activation function, so its output values range between 0 and 1. If the gate is on (close to 1), the memory is retained. If the gate is turned off (close to 0), the values are removed.

- Output gate: Controls how much information is retrieved from the memory cell after the cell state has been passed through the tanh activation.
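The components listed above can be put together in a minimal sketch of one LSTM step. It follows the commonly used formulation with sigmoid gates and tanh squashing, simplified to a single scalar input and a single hidden unit. The function `lstm_step`, the parameter dictionary `params` and its key names are hypothetical and chosen only for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM; returns the new (h, c)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate, in (0, 1)
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate, in (0, 1)
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value, in (-1, 1)
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate, in (0, 1)
    c = f * c_prev + i * g   # memory cell: keep part of the old value, add part of the new
    h = o * math.tanh(c)     # output: gated, squashed view of the memory cell
    return h, c

# Hypothetical parameters: every weight set to 0.5, every bias set to 0.
params = {name: 0.5 for name in ("wf", "uf", "wi", "ui", "wg", "ug", "wo", "uo")}
params.update({"bf": 0.0, "bi": 0.0, "bg": 0.0, "bo": 0.0})

h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
```

The update `c = f * c_prev + i * g` is additive, so when the forget gate stays close to 1 the memory cell is carried across time steps almost unchanged. This is the usual explanation for why LSTMs cope better with long-range dependencies than vanilla RNNs.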

Long Short Term Memory Cell or Block (Source- Wiki)

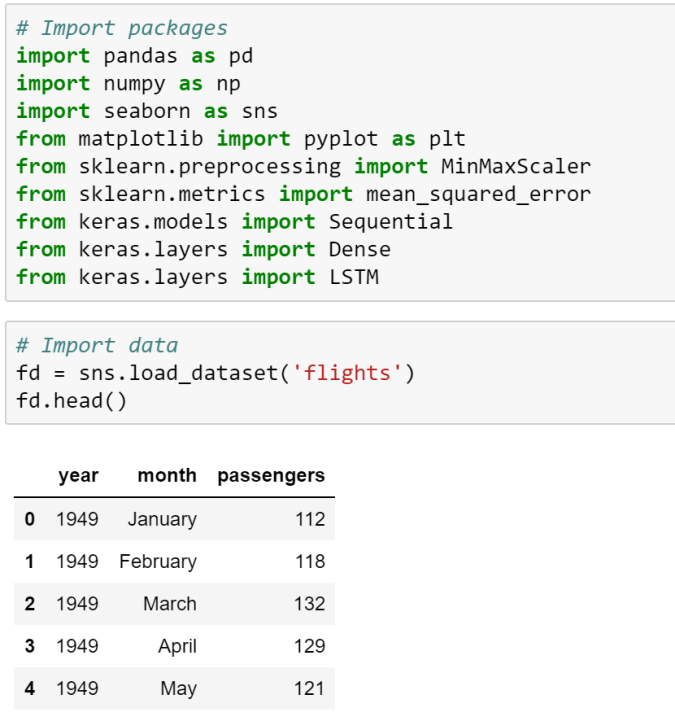

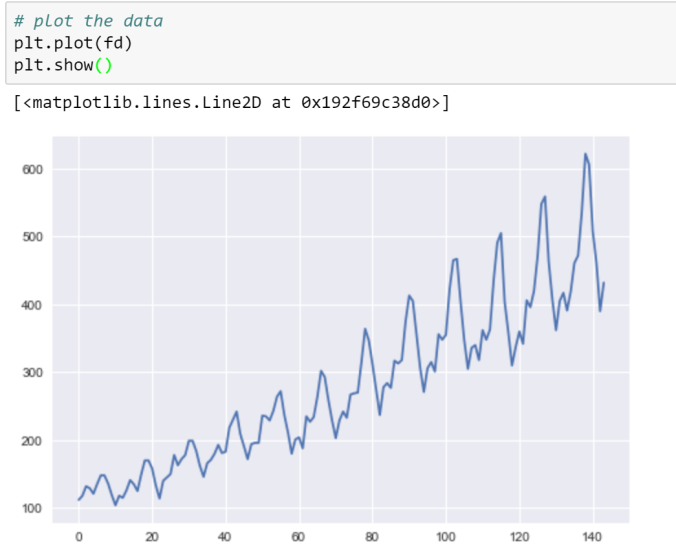

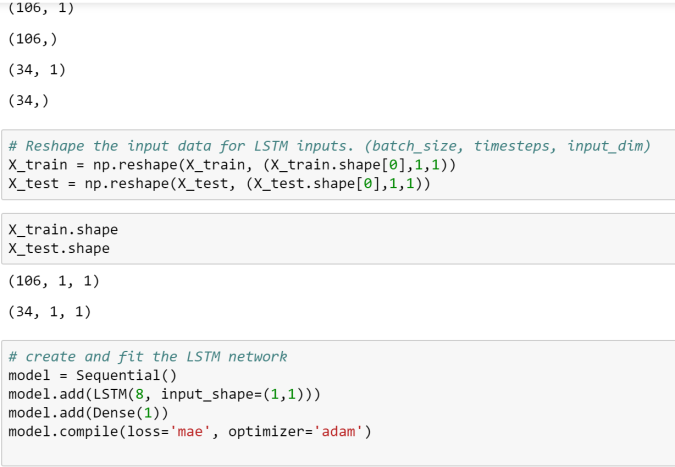

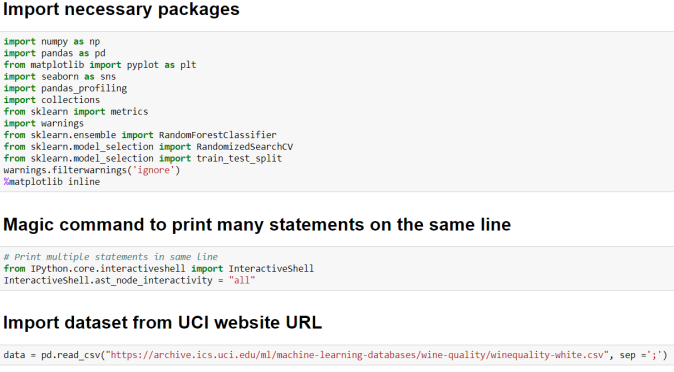

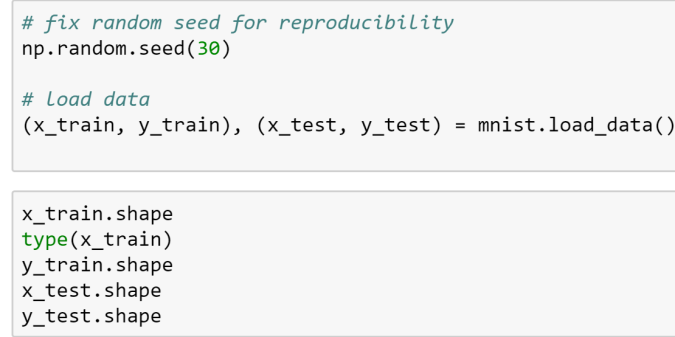

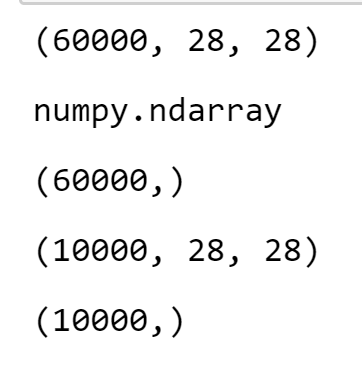

Let’s work through an example which we used in a previous article.

Here is an excellent article in case you want to explore more.

Cheers!
